If I ask one of my best friends if he’s seen a movie, he’ll answer “yes,” followed by “I mean, I read the Wikipedia plot summary.” This drives me crazy. It’s also very funny, which is why he does it, and it means I get to play the role of “Noooo, to understand the art you have to engage with it.”

Another of my closest friends will join the debate, indignant at the first, and say “The Wikipedia summary is lifeless, you have to read the Reddit synopsis to understand the emotions behind the people who see the movie.”

They’re both missing the entire point here, right? What, exactly, are they missing?

Stochastic Parrots

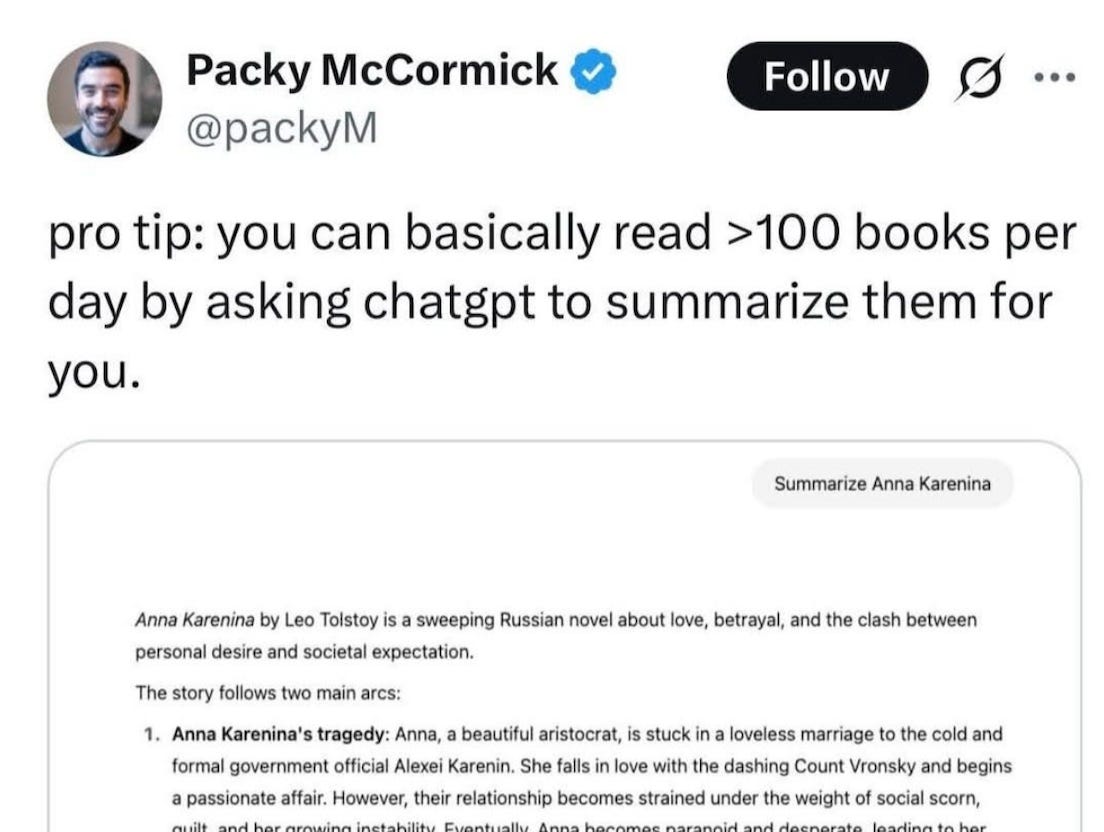

There’s some discourse around this tweet, which was reposted disapprovingly on Substack. I mean, it only has around 2k likes, which isn’t a ton (and it’s satire), but it sparked discourse in me, so I’m going to talk about it. And right off the bat, before I get into the meat of the issue, I’ll point out that I think there is one valuable way to read 100 books per day off of AI: if those books are educational.

If I’m struggling with a physics textbook and have a test coming up, and I use ChatGPT to give me simpler versions of the chapter problems, plus analogies, so I can learn more efficiently, this strikes me as good! If a book has 20 examples for one problem and you use AI to get one really good example that makes you understand the problem immediately, and understand how the knowledge “fits in” with the rest of the course, then you really have absorbed the important part of the book.

Some may protest that AI in schools is a disaster, but that’s not because AI can’t explain the problem adequately. The issue is that AI really does understand the problems well, and a student can use that to produce a good answer to pretty much anything. If a student asks ChatGPT a question, and it produces 10 paragraphs about how it reached the answer, and the student’s eyes glaze over those paragraphs before they copy and paste the answer into the quiz, then that’s the fault of the student. There was valuable learning to do there, but the student isn’t prioritizing the learning; if they were, ChatGPT would be amazing! You can ask it to explain the specific parts you’re confused by, which a textbook can’t do. Anyone who’s used AI to really try to learn complicated topics will know it’s great.

So I think the real question here is what we want out of non-educational books and media, and the obvious word I’m looking for is art. What are we looking to get out of art? What does AI miss about art? Why can’t I use AI to summarize a famous book? Why would people be mad if I used AI to generate the next famous book?

Art is, like, meant to evoke emotions or whatever

I think there are two very important reasons that art is different from educational content. One will sound quite shallow but is vital; the other might be less vital but is our only hope of preserving some value in human art as AI gets better, and better, and better.

The first point is that art is meant for you, the consumer, to, like, feel things. Happiness, sadness, anger, ennui, and other European words[1] too. This is true of “safer” art, like Marvel movies attempting to evoke triumph and laughter, and of less “safe” art, like House of Leaves, which tries to give you a sense of claustrophobia, dread, and… other emotions. I don’t know what they are, but when I’m looking at a piece of art, I know that I’m feeling something. This is it, right? The artist is attempting to distill some emotion, like “love” or “serenity,” and whatever emotion the piece makes you feel, that’s a success. “It’s meant to have some commentary about the human condition, suck you into a world.”

This is the very trivial point about why reading a summary isn’t reading a whole book: even if you know all the characters and the plot synopsis, you’re not getting the all-important emotions the book is meant to evoke. This is unrelated to AI, and is just as much of an issue with reading a Wikipedia page or a SparkNotes summary. “The journey is more important than the destination.” Ok, then let’s have AI generate a short poem or something that’s supposed to evoke the feeling of the book. What’s the problem with that? Better yet, just generate a book that’s better at evoking the vibe of the original story, a book that’s even deeper. That sounds more efficient. We only have so much time. But surely AI couldn’t be good at something like that…

This point, the one about evoking emotions, is a terrible, terrible point to hang your hat on as something that is “purely human” and irreplaceable by AI. For a long time, people have decried AI art as “slop” that would forever be unable to capture the nuances of real art, and for years I’ve warned that you do not want to hang your hat on AI art being bad because its literal quality is poor. The AI art we have today is the worst we’ll ever have, both at evoking emotions and in the literal quality of the artwork. I think this point was much stronger and more controversial two years ago, but we now live in a world where people can’t tell whether something is AI art or not, and AI art can be rated higher than human art.

You can point to some ineffable “human richness” as to why AI art will never be better than humans, but if you can’t tell whether a piece is done by a human or robot, then I’m forced to treat you like those people who say they’re allergic to 5G but can’t tell if the router in the room with them is on. “So far, every time people have claimed there’s something an AI can never do without ‘real understanding’, the AI has accomplished it with better pattern-matching,” from this article. And what’s gonna happen when an AI crams 5,000 microrichness points and hidden flourishes into the piece it makes because it can do operations at the speed of light and your neurons fire 1,000 times slower? No, there’s no hidden richness that an AI cannot replicate. An AI could write the next great American novel, and the people who say that’s just too complicated for it are batting 0.000 right now.

But the second reason, I think, could save us.

“I thought AI would do the data analytics and leave the art to us”

In the beforetimes, when ChatGPT was just a twinkle in Sam Altman’s eyes, it was thought an AI would never be able to understand the human language. That it would never be able to make art. Fields like evolutionary computation assumed it was best suited for data analysis, because… well, duh! It’s a robot and numbers are spreadsheets! And it’s also good there, of course, but ChatGPT changed the whole game. As art and writing became the first barriers to fall, there was a sense of indignance: “I thought AI would automate all the hard stuff in society, and we would live in a beautiful post scarcity world where we could paint pictures of butterflies all day.” Well, I want to talk about

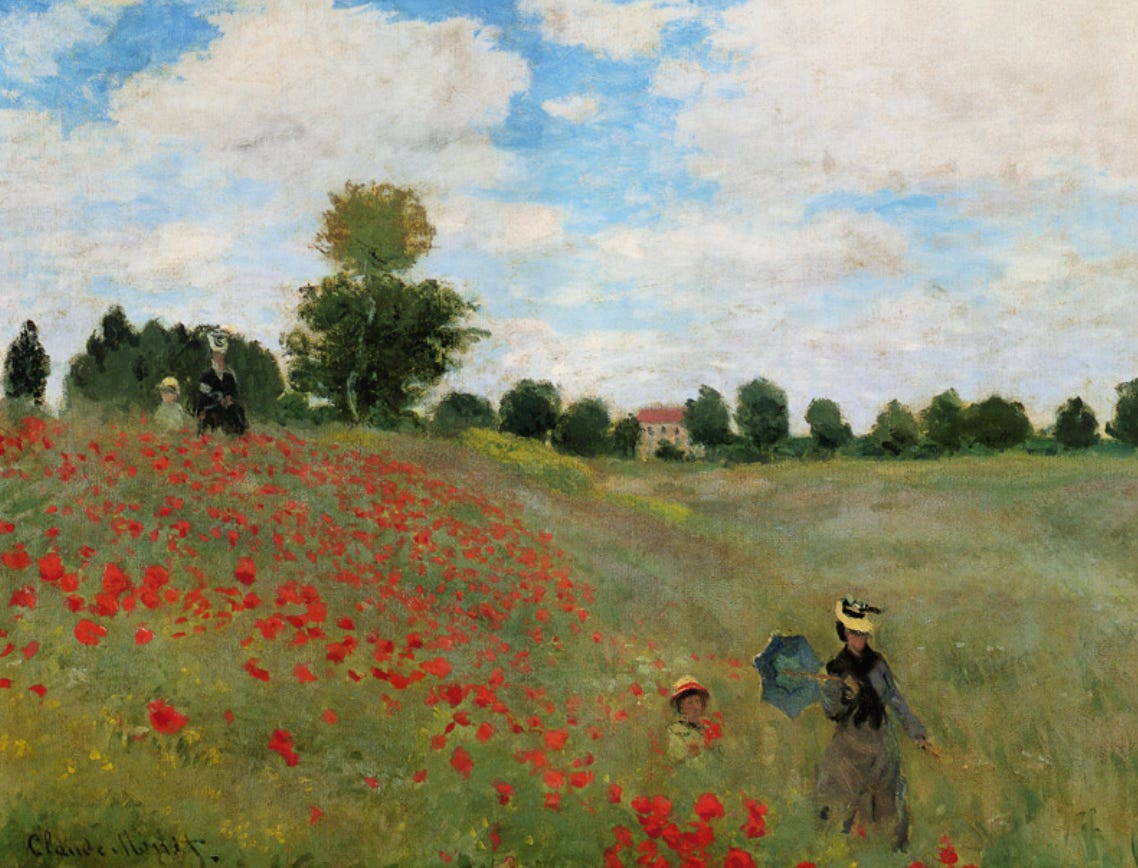

’s comment on the tweet:This is a funny reply, but in a certain sense… isn’t this one kinda true? As Scott Alexander puts it in the fantastic Colors of Her Coat:

> What about cameras? A whole industry of portraits, landscapes, cityscapes - totally destroyed. If you wanted to know what Paris looked like, no need to choose between Manet’s interpretation or Beraud’s interpretation or anyone else’s - just glance at a photo. A Frenchman with a camera could generate a hundred pictures of Paris a day, each as cold and perspectiveless as mathematical truth. The artists, defeated, retreated into Impressionism, or Cubism, or painting a canvas entirely blue and saying it represented Paris in some deeper sense. You could still draw the city true-to-life if you wanted. But it would just be more Paris.

There’s a certain ideal in which AI would pack all the useful bits of a book into a short summary, just like a photo packs all the useful bits of a place into an easy format. It’d tell you all the emotions you would have felt, just as if you remembered a book you read long ago. Sure, you wouldn’t get the full experience in real time, but maybe an AI could summarize the feeling of a book into a more compact format; it’s right there in the name, “generative.” Maybe the issue with AI book summaries is that they also need to tell you what feeling the book is trying to express, and if they did that, you really wouldn’t have to read the book at all. Plot summary? Check. Emotions? Check. It’d be as if you had finished a book a year ago and were looking back at it wistfully.

Except no, this is all crazy, right? Here it is, my second reason for wanting humans to make art, and it’s the exact opposite of “death of the artist”: I care that there is a conscious being who made it. I care that I can catch a glimpse of a human’s mind and peel it open, and even if I don’t know whether a human made the art, I care whether someone did! AI slop is not slop because it’s bad; it’s slop because it’s not real, and a conscious being breathing life into a piece is what makes it real. I care about conscious beings more than anything else in the universe. I want them to be happy, fulfilled, and not in pain, and I truly mean it when I say nothing else in the universe matters.

So even if an AI can generate 1,000 pieces more thoughtful, evocative, and deep than one by a human, if there’s nothing behind the pixels I’m looking at, if no one is feeling anything except the consumer, then it’s not art. There’s something about a piece that took 50 years of human contemplation that makes it better art than something picked from 100 separate images generated from the same prompt. So even if an AI can compress the entire feeling of a book into a paragraph, even if it can generate masterpieces and wonders the likes of which I’ve never seen, as long as there are still humans who want to make art, I think their art matters more.

…

I think even the most well-crafted Wikipedia summary, or parroting of a movie’s points on Reddit, or incredible summary evoking the same vibes as the original, falls short as art if there’s not a human putting emotions behind it[2]. My friends are missing the point. I think the reason a book summary falls short is not that it doesn’t convey the plot, not that it doesn’t evoke the emotions, not that it’s not long enough, but that the book was crafted by someone, anyone. I’m not against AI. It’s amazing, one of the best tools humanity has ever invented, and it will only get better; people doubting its ability to craft impressive works will be proven wrong. I’m sure in the future humans will be so stunlocked by constant wonders beyond their imagination that they won’t even care about making art or something.

But as long as humans do still want to make art, I think you owe it to them to look at what they made, not just the bullet point list.


[1] schadenfreude, uhhhhh, déjà vu, uhhhhh… I’m drawing a blank… je ne sais quoi? That’s kinda an emotion. Is that all?

[2] This can get into the weeds when some of it is made by a human and some by an AI, but I think that’s a gray area you can take on a piece-by-piece basis.

Interesting topic. I feel like it's an uncharacteristically weak argument of yours, though; perhaps you just meant to provoke discussion?

Essentially, you're saying:

1) AI capabilities will soon be such that nobody will be able to tell the difference between human artistic output and AI-generated content

2) but that you don't consider that art because "if there’s nothing behind the pixels I’m looking at, if no one is feeling anything except the consumer, then it’s not art" and "even if an AI can compress the entire feeling of a book into a paragraph, even if it can generate masterpieces and wonders the likes of which I’ve never seen, as long as there are still humans who want to make art, I think their art matters more."

But that second point is purely a subjective view, and one that could be criticized by your own logic: "if you can’t tell whether a piece is done by a human or robot, then I’m forced to treat you like those people who say they’re allergic to 5G but can’t tell if the router in the room with them is on." AI-generated art is trained on human output; is it not conceivable that the same emotion in the human art is captured in the AI, even if unintentionally? Is there any difference?

If I learned to identically copy a master's piano piece, even though I did not feel the emotion of his recording, who are you to say whether or not what I am producing is art? You wouldn't even be able to tell whether it was me or him playing. AI could easily be the same.

Re: visiting 100 foreign countries using Google Street View: you do get some of the benefit of travel with something like that (and reading about other people’s travels, watching their travel vlogs, etc.), but there’s still an ineffable experience of actually physically being in a place and living amongst its people that cannot be replicated through mere distant observation. I’m reminded of the Mary’s Room philosophical thought experiment: you can learn as much as you want about the science of color, but none of it will let you know what it feels like to actually see color.